Understanding Basics

Sigmoid Function

Imagine you have a dimmer switch for a light. On one end (let's say all the way to the left), the light is completely off (0). On the other end (all the way to the right), the light is super bright (1). In between those extremes, the dimmer slowly turns the light on brighter and brighter.

The sigmoid function is kind of like a dimmer switch for numbers! It takes any number you give it and squishes it between 0 and 1. Numbers much lower than zero will get really close to 0, while numbers much higher than zero will get really close to 1. Numbers in the middle will be somewhere between 0 and 1, depending on how close they are to zero or one.

Think of it like a slide. If you start way at the top of the slide (a very high number), you'll end up really close to the bottom (close to 0) after going down. If you start way at the bottom (a very low number), you won't move much at all (stay close to 0). But if you start somewhere in the middle, you'll end up somewhere in the middle between the top and bottom (between 0 and 1).

The sigmoid function is useful for computers because it can take any kind of number and turn it into a value between 0 and 1, which is helpful for making decisions. It's like a special translator for numbers!

How it Works

The sigmoid function works like a magic machine that squishes any number you give it between 0 and 1. Here's a peek behind the curtain:

The secret sauce: It uses something called the exponent (like a fancy power button) and a special number called "e" (around 2.7). It takes your number, wraps it in a negative sign, and shoves it into the exponent.

Why the squish? The bigger your number is (positive or negative), the bigger the result of the exponent gets. Remember, bigger exponents make numbers grow really fast! This makes the output either very close to 0 (for very negative numbers) or very close to 1 (for very positive numbers). Finally, the function flips everything upside down with a fraction (1 divided by everything) to squeeze the output between 0 and 1.

The formula: While the full formula (1 / (1 + e^(-x))) might look scary, all it's doing is those steps above. Don't worry about memorizing it, but think of it as the magic recipe for the squishing machine!

Remember: The sigmoid function is like a helpful tool for computers. It takes any number and translates it into a value between 0 and 1, which makes it easier for computers to make decisions in certain situations.

Interview POV

In an interview setting, here's a breakdown of how you can explain the sigmoid function and its role in logistic regression:

Sigmoid Function:

- The sigmoid function, also known as the logistic function, is a mathematical function that takes any real number as input and squishes the output between 0 and 1.

- Imagine it like a dimmer switch for numbers. Numbers closer to negative infinity are mapped close to 0, while numbers closer to positive infinity are mapped close to 1. Values in the middle are mapped somewhere between depending on their distance from the extremes.

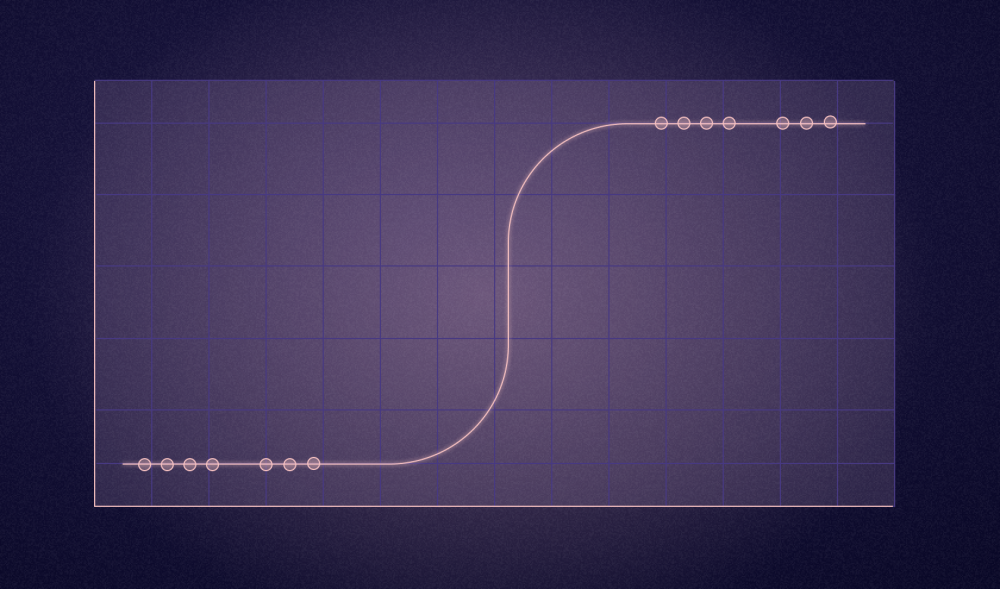

- This "S" shaped curve ensures the output represents a probability between 0 (impossible) and 1 (certain).

Use in Logistic Regression:

- Logistic regression is a machine learning algorithm used for binary classification problems (predicting one of two classes).

- The model generates a score based on the input features. However, this score wouldn't directly tell us the probability of belonging to a specific class (e.g., email being spam or not).

- The sigmoid function comes in here! It takes the score from the logistic regression model and transforms it into a probability between 0 and 1.

- This allows us to interpret the output as the likelihood of an observation belonging to a particular class. For example, a sigmoid function output of 0.8 indicates an 80% chance of belonging to class 1.

- We can then set a threshold (commonly 0.5) to classify the data point. Values above the threshold are assigned to class 1, and values below are assigned to class 0.

Bonus points:

- Briefly mention that while sigmoid is common, other activation functions might be used in logistic regression depending on the situation.

By explaining the sigmoid function's behavior and its crucial role in converting a linear model's output into interpretable probabilities for logistic regression, you'll demonstrate a strong understanding of this essential concept.

Interpretation of Coefficients

What's this?

Imagine you're making a lemonade stand. You want to know how much lemonade to make so you don't run out or have a ton leftover. To figure this out, you might consider things like:

- Sunshine: The brighter the sun, the more people will want a cool drink, so you might make more lemonade.

- Temperature: The hotter it is, the thirstier people will be, so again, more lemonade!

Logistic regression is kind of like your lemonade stand, but instead of predicting how much lemonade to make, it predicts the chance of something happening. It uses numbers representing different things (like sunshine and temperature) to guess how likely something is, like someone buying lemonade.

The coefficients in logistic regression are like little dials for each of those things. Turning a dial up (positive coefficient) means that thing makes it more likely something will happen (like buying lemonade). Turning it down (negative coefficient) means it makes it less likely.

For example, if the sunshine dial is turned way up (high positive coefficient), it means a really sunny day makes it very likely people will want lemonade. But if the temperature dial is barely turned (close to zero coefficient), it means temperature doesn't have a big impact on whether someone buys lemonade.

Remember, these dials only affect the chance, not a guarantee. Even on a super sunny day, some people might not want lemonade, and even on a cool day, someone really thirsty might buy some!

How it works

Logistic regression uses math to understand the relationship between different factors (independent variables) and a single outcome you want to predict (dependent variable), but instead of a direct numerical prediction, it gives a probability between 0 and 1. Here's the breakdown of the math behind coefficients:

The Model: The core of logistic regression is a linear equation that combines the independent variables (let's call them X1, X2, etc.) with their corresponding weights (coefficients - denoted by β1, β2, etc.) and a bias term (β0). This equation looks like:

linear_model = β0 + β1 * X1 + β2 * X2 + ... (more terms for other variables)

The Sigmoid Function: As you know, the linear model above just gives a numerical output. Here's where the sigmoid function (σ) comes in. It takes the linear model's output and squishes it between 0 and 1, representing the probability (p) of the desired outcome. This conversion is done using the formula:

p(y = 1) = σ(linear_model) = 1 / (1 + e^(-linear_model))

Understanding Coefficients:

- The coefficients (β values) tell you how much each independent variable (X) influences the predicted probability.

- Positive coefficient (β > 0): If a coefficient is positive, it means that increasing the value of that variable (X) will lead to an increase in the predicted probability (p).

- Negative coefficient (β < 0): Conversely, a negative coefficient indicates that increasing the value of that variable will decrease the predicted probability.

- Magnitude of coefficient: The larger the absolute value of the coefficient (|β|), the stronger the influence of that variable on the probability. A small coefficient suggests a weaker influence.

Example:

Imagine you're predicting the probability of a customer buying a product based on their age (X1) and income (X2).

- If the coefficient for age (β1) is positive, it means older customers (higher X1) are more likely to buy (higher probability).

- If the income coefficient (β2) is negative, it suggests higher income (higher X2) might make them less likely to buy (lower probability).

- The magnitude of each coefficient tells you how much age and income affect the purchase probability. A larger coefficient for income might indicate it has a stronger influence than age.

Important Note:

While the formulas might seem complex, the key takeaway is that coefficients act like dials adjusting the influence of each variable on the final probability prediction in logistic regression.

Interview POV

In an interview setting, explaining coefficients in logistic regression goes beyond a basic understanding. Here's how you can craft a strong response:

Coefficients in Logistic Regression:

- Logistic regression uses coefficients (β values) to quantify the relationship between independent variables (X) and the predicted probability.

- These coefficients act like weights, indicating how much each variable influences the model's output probability between 0 and 1.

Interpreting Coefficients:

- Positive coefficient (β > 0): A positive coefficient suggests that increasing the corresponding independent variable (X) leads to a higher predicted probability for the target class (e.g., email being spam).

- Negative coefficient (β < 0): Conversely, a negative coefficient indicates that increasing the variable decreases the probability of the target class.

- Magnitude of coefficient (|β|): The absolute value of the coefficient reflects the strength of the influence. A larger |β| signifies a stronger impact of that variable on the model's prediction. Coefficients closer to zero suggest a weaker influence.

Example:

Consider predicting loan approval based on income (X1) and credit score (X2).

- A positive coefficient for income (β1) implies higher income (X1) increases the probability of loan approval.

- A negative coefficient for credit score (β2) suggests a lower credit score (X2) decreases the likelihood of approval.

- The magnitudes of β1 and β2 reveal the relative importance of income and credit score in the model's prediction.

Beyond Interpretation:

- Coefficients are crucial for model interpretability. They help us understand which factors significantly impact the predicted probability.

- We can use techniques like feature scaling to ensure coefficients are on a comparable scale for better comparison.

- However, it's important to remember that coefficients represent the average effect within the data and might not perfectly explain individual cases.

By explaining the role of coefficients in quantifying variable influence, their interpretation through positive/negative signs and magnitudes, and their significance for model understanding, you'll demonstrate a solid grasp of this core concept in logistic regression.

Linear Regression vs. Logistic Regression

Here's a breakdown explaining the key differences between Linear Regression and Logistic Regression in a way that's easy to understand:

Imagine you're a superhero trying to predict stuff...

- Linear Regression Superhero: This superhero is good at predicting continuous values, like how strong a villain will be based on their size (think Incredible Hulk) or how far you can fly based on the amount of jet fuel in your jetpack. They use a special formula to find a best-fitting line that represents the relationship between things.

- Logistic Regression Superhero: This superhero focuses on predicting probabilities of events happening, like the chance of a villain escaping based on their past escapes (think Loki) or the probability of rain tomorrow based on today's humidity. They use a special tool called the sigmoid function to turn their predictions into probabilities between 0 (never happening) and 1 (absolutely happening).

Key Differences:

- What they predict: Linear Regression predicts actual numbers, while Logistic Regression predicts probabilities (0 to 1).

- Output: Linear Regression gives a continuous line, while Logistic Regression gives an S-shaped curve (sigmoid function).

- Applications: Linear Regression is good for things like weather forecasting (predicting temperature) or sales figures (predicting total sales). Logistic Regression is useful for tasks like spam filtering (predicting spam email) or loan approval (predicting chance of approval).

Bonus:

- Both superheroes use fancy formulas, but Linear Regression's formula is simpler and aims to create a best-fitting straight line. Logistic Regression's formula is more complex and uses the sigmoid function to transform its output into probabilities.

Remember:

- These superheroes have different strengths! Choose the one that best suits your prediction task - continuous values or probabilities.